Archive for September, 2011

Consider this indefinite integral:

$$\int \frac{1}{\sqrt{x(2-x)}}\,dx$$

Feed it to MATLAB’s symbolic toolbox:

```matlab
syms x
int(1/sqrt(x*(2 - x)))

ans =
asin(x - 1)
```

Feed it to Mathematica 8.0.1:

```mathematica
Integrate[1/Sqrt[x (2 - x)], x] // InputForm

(2*Sqrt[-2 + x]*Sqrt[x]*Log[Sqrt[-2 + x] + Sqrt[x]])/Sqrt[-((-2 + x)*x)]
```

Substitute x = 1.2 into both results:

- MATLAB’s answer evaluates to 0.2014
- Mathematica’s answer evaluates to -1.36944 + 0.693147 I
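The two numbers can be reproduced with the small Python check below. It is not part of the original comparison. It transcribes both results literally and evaluates them with the principal-branch functions in `cmath`. This assumes that Python's branch conventions for `sqrt` and `log` match Mathematica's for these expressions. The function names are my own. It also evaluates both results at a few other points in 0 < x < 2, to see how the two answers are related.

```python
import cmath
import math


def matlab_antiderivative(x):
    """asin(x - 1), the result returned by MATLAB's int()."""
    return math.asin(x - 1)


def mathematica_antiderivative(x):
    """Mathematica 8.0.1's InputForm result, transcribed term by term.

    Uses principal branches; for 0 < x < 2, sqrt(x - 2) is purely imaginary,
    which is why the result comes out complex.
    """
    s = cmath.sqrt(x - 2)
    r = cmath.sqrt(x)
    return 2 * s * r * cmath.log(s + r) / cmath.sqrt(-((x - 2) * x))


for x in (0.25, 0.5, 1.2, 1.75):
    m = matlab_antiderivative(x)
    w = mathematica_antiderivative(x)
    print(f"x={x}: MATLAB {m:.6f}  Mathematica {w:.6f}  difference {w - m:.6f}")
```

On 0 < x < 2 the difference column should be about -1.570796+0.693147j at every sample point, which is -π/2 + i ln 2. So on this interval the two results are both antiderivatives of the integrand, differing only by a (complex) constant of integration.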

Discuss!

So, you’re the proud owner of a new license for MATLAB’s Parallel Computing Toolbox (PCT) and you are wondering how to get some bang for your buck as quickly as possible. Sure, you are going to learn about constructs such as parfor and spmd, but that takes time and effort. Wouldn’t it be nice if you could speed up some of your MATLAB code simply by saying ‘Turn parallelisation on’?

It turns out that The Mathworks have been adding support for their Parallel Computing Toolbox all over the place, and all you have to do is switch it on (assuming that you actually have the Parallel Computing Toolbox, of course). For example, say you had the following call to fmincon (part of the Optimisation Toolbox) in your code:

```matlab
[x, fval] = fmincon(@objfun, x0, [], [], [], [], [], [], @confun, opts)
```

To turn on parallelisation across two cores, just do this:

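```matlab
matlabpool 2;  % open a pool of two MATLAB workers

opts = optimset('fmincon');                      % default options for fmincon
opts = optimset(opts, 'UseParallel', 'always');  % modify opts rather than replacing it

[x, fval] = fmincon(@objfun, x0, [], [], [], [], [], [], @confun, opts);
```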

That wasn’t so hard, was it? The speedup (if any) depends entirely on your particular optimisation problem.
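Why does the speedup depend on the problem? As I understand the Optimization Toolbox documentation, what `UseParallel` buys you in fmincon is parallel estimation of gradients by finite differences. Each perturbed objective evaluation is independent of the others, so the evaluations can be handed out to workers.

Here is a minimal Python sketch of that idea, assuming forward differences. The helper names, the step `h` and the starting point `[-1, 1]` are my own illustrative choices, not MathWorks code. `ThreadPoolExecutor` is used only to show the structure. For pure-Python objectives it gives no real speedup because of the GIL, and a process pool would be needed for that.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def objfun(x):
    # The objective used in the fmincon examples in this post (0-based indices here).
    return math.exp(x[0]) * (4 * x[0] ** 2 + 2 * x[1] ** 2 + 4 * x[0] * x[1] + 2 * x[1] + 1)


def _partial(f, x, fx, i, h):
    # One forward-difference partial derivative: (f(x + h*e_i) - f(x)) / h.
    xp = list(x)
    xp[i] += h
    return (f(xp) - fx) / h


def forward_difference_gradient(f, x, h=1e-7, pool=None):
    """Forward-difference gradient of f at x (hypothetical helper).

    The base value f(x) is computed once. The n perturbed evaluations are
    independent, so if a pool is supplied they are submitted to it;
    otherwise they run serially.
    """
    fx = f(x)
    indices = range(len(x))
    if pool is None:
        return [_partial(f, x, fx, i, h) for i in indices]
    return list(pool.map(lambda i: _partial(f, x, fx, i, h), indices))


x0 = [-1.0, 1.0]  # illustrative starting point (assumed)
serial = forward_difference_gradient(objfun, x0)
with ThreadPoolExecutor(max_workers=2) as pool:
    parallel = forward_difference_gradient(objfun, x0, pool=pool)
print(serial, parallel)
```

Both calls perform exactly the same arithmetic, so they return identical gradients. With only two variables there are just two perturbed evaluations to share out, which is why a tiny problem like the ones in this post has very little work to parallelise.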

Why isn’t parallelisation turned on by default?

The next question that might occur to you is ‘Why doesn’t The Mathworks just turn parallelisation on by default?’ After all, although the above modification is straightforward, it does require you to know that this particular function supports parallel execution via the PCT. If you didn’t think to check, your code would be doomed to serial execution forever.

The simple answer to this question is ‘Sometimes the parallel version is slower’. Take this serial code, for example:

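```matlab
objfun = @(x) exp(x(1))*(4*x(1)^2 + 2*x(2)^2 + 4*x(1)*x(2) + 2*x(2) + 1);
% deal returns the nonlinear inequality constraints c and empty equality constraints ceq
confun = @(x) deal([1.5 + x(1)*x(2) - x(1) - x(2); -x(1)*x(2) - 10], []);
x0 = [-1, 1];  % starting point (assumed; x0 is not defined in the original snippet)

tic;
[x, fval] = fmincon(objfun, x0, [], [], [], [], [], [], confun);
toc
```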

On the machine I am currently sat at (a quad-core box running MATLAB 2011a on Linux), this typically takes around 0.032 seconds to solve. With a problem that trivial, my gut feeling is that we are not going to get much out of switching to parallel mode. Here is the parallel version:

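```matlab
objfun = @(x) exp(x(1))*(4*x(1)^2 + 2*x(2)^2 + 4*x(1)*x(2) + 2*x(2) + 1);
confun = @(x) deal([1.5 + x(1)*x(2) - x(1) - x(2); -x(1)*x(2) - 10], []);
x0 = [-1, 1];  % starting point (assumed; x0 is not defined in the original snippet)

% Only do this next line once. It opens two MATLAB workers.
matlabpool 2;

opts = optimset('fmincon');                      % default options for fmincon
opts = optimset(opts, 'UseParallel', 'always');  % modify opts rather than replacing it

tic;
[x, fval] = fmincon(objfun, x0, [], [], [], [], [], [], confun, opts);
toc
```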

Sure enough, this increases execution time dramatically to an average of 0.23 seconds on my machine. There is always a computational overhead that needs paying when you go parallel and if your problem is too trivial then this overhead costs more than the calculation itself.
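To put rough numbers on the overhead argument, here is a toy cost model. It is my own simplification, not how MathWorks describe the PCT: parallel time = a fixed overhead + (serial work / number of workers).

Plugging in the timings above (0.032 s serial, 0.23 s with two workers) implies an overhead of about 0.21 s. The model then says the serial work would need to exceed about 0.43 s before two workers could break even. Real problems won't divide perfectly (fmincon only farms out part of its work), so treat these as ballpark figures at best. The function names are hypothetical.

```python
def parallel_time(serial_time, overhead, workers):
    """Toy model: a fixed overhead plus perfectly divisible work."""
    return overhead + serial_time / workers


def implied_overhead(serial_time, measured_parallel_time, workers):
    """Invert the toy model to estimate the overhead from two timings."""
    return measured_parallel_time - serial_time / workers


def break_even_serial_time(overhead, workers):
    """Serial run time above which the toy model predicts a parallel win.

    overhead + T/p < T  <=>  T > overhead * p / (p - 1), for p > 1.
    """
    if workers < 2:
        raise ValueError("need at least two workers to break even")
    return overhead * workers / (workers - 1)


# Timings quoted in this post (seconds) and the two-worker pool used there.
serial, parallel, workers = 0.032, 0.23, 2
overhead = implied_overhead(serial, parallel, workers)
print(f"implied overhead: {overhead:.3f} s")
print(f"break-even serial time: {break_even_serial_time(overhead, workers):.3f} s")
```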

So which functions support the Parallel Computing Toolbox?

I wanted a web-page that listed all functions that gain benefit from the Parallel Computing Toolbox but couldn’t find one. I found some documentation on specific toolboxes such as Parallel Statistics but nothing that covered all of MATLAB in one place. Here is my attempt at producing such a document. Feel free to contact me if I have missed anything out.

This covers MATLAB 2011b and is almost certainly incomplete. I’ve only covered toolboxes that I have access to and so some are missing. Please contact me if you have any extra information.

Bioinformatics Toolbox

Global Optimisation

- Various solvers use the PCT. See this part of the MATLAB documentation for details.

Image Processing

- blockproc

- Note that many Image Processing functions run in parallel even without the parallel computing toolbox. See my article Which MATLAB functions are Multicore Aware?

Optimisation Toolbox

Simulink

- Running parallel simulations

- You can increase the speed of diagram updates for models containing large model reference hierarchies by building referenced models that are configured in Accelerator mode in parallel whenever conditions allow. This is covered in the documentation.

Statistics Toolbox

- bootstrp

- bootci

- cordexch

- candexch

- crossval

- dcovary

- daugment

- growTrees

- jackknife

- lasso

- nnmf

- plsregress

- rowexch

- sequentialfs

- TreeBagger

Other articles about parallel computing in MATLAB from WalkingRandomly

- Which MATLAB functions are multicore aware? There are a ton of functions in MATLAB that take advantage of parallel processors automatically. No Parallel Computing Toolbox necessary.

- Parallel MATLAB with OpenMP mex files: Want to parallelize your own functions without purchasing the PCT? Not afraid to get your hands dirty with C? Perhaps this option is for you.

- MATLAB GPU/CUDA Experiences and tutorials on my laptop – A series of articles where I look at GPU computing with CUDA on MATLAB

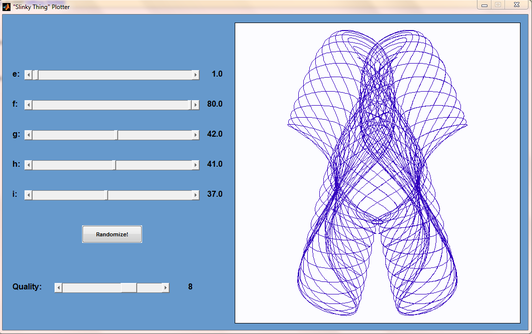

Matt Tearle has produced a MATLAB version of my Interactive Slinky Thing which, in turn, was originally inspired by a post by Sol over at Playing with Mathematica. Matt adatped the code from some earlier work he did and you can click on the image below to get it. Thanks Matt!

Welcome to August’s ‘A Month of Math Software’ where I look at everything from blog posts about math libraries through to the latest releases. Click here for earlier articles in the series.

Mathematical software packages

- Maxima, the venerable open source computer algebra package (it is based on a 1960s codebase called Macsyma), has been upgraded to version 5.25.1. See the change-log here. Download it from Sourceforge and check out a Maxima tutorial (how to plot direction fields for first order ODEs) right here at WalkingRandomly.

- SAGE is arguably the best general purpose mathematics package in the open source world and it saw an update to version 4.7.1 this month. See what got changed at http://www.sagemath.org/mirror/src/changelogs/sage-4.7.1.txt. I played with an old version a little while ago (see here and here) and am still offering a bounty for anyone who can add a certain piece of functionality to this product.

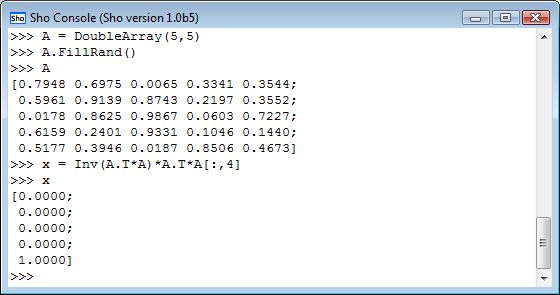

- Next up is a free (as in beer) package from Microsoft called Sho which saw an upgrade to version 2.0.5 this month. I’ll let Microsoft themselves tell you what it is:

“Sho is an interactive environment for data analysis and scientific computing that lets you seamlessly connect scripts (in IronPython) with compiled code (in .NET) to enable fast and flexible prototyping.”

It certainly looks very interesting, with features such as direct integration with Azure (Microsoft’s cloud computing product), optimization and loads more. Let me know what you think of it if you try it out.

- IBM’s SPSS Statistics is now at version 20. Take a look at what’s new in this commercial package at http://www-01.ibm.com/software/analytics/spss/statistics/resources/whats-new.html

Mathematical Software Libraries

- The AMD Core Math Library was upgraded to version 5.0 this month. I think the following is the complete change-log:
  - DGEMM and SGEMM have been tuned for AMD Family 15h processors. These take advantage of AVX and FMA-4 instructions to achieve high efficiency using either one or both threads of a compute unit.
  - The Fortran code base for the library is compiled with AVX and FMA-4 flags to support the AMD Family 15h processors. This library will not run on processors that do not support AVX and FMA-4. The package includes legacy libraries with SSE/SSE2 instructions suitable for use on AMD Family 10h and AMD Family 0fh processors.
  - New 2D and 3D real-to-complex FFT functions have been introduced. Included are samples demonstrating how to use the new functions.
  - The L’Ecuyer, Wichmann-Hill, and Mersenne Twister random number generators have been updated to improve performance on all processor types.
  - The vector math library dependency has been removed from the library, and libacml_mv has been removed from the build. These AMD math functions are available as a separate download from the AMD web page.

- While on the subject of the ACML, check out this interview about the library with Chip Freitag, one of its developers, that was recorded earlier this month.

- AMD have also released version 1.4 of the AMD Accelerated Parallel Processing Math Libraries (APPML). This is an OpenCL library aimed at GPUs. As far as I can tell, this is just a bug fix release and so there are no new routines available.

- Jack Dongarra et al have moved their linear algebra library for heterogeneous/hybrid architectures, MAGMA, from release candidate 5 to a full version 1.0 release. Roughly speaking, you can think of this project as LAPACK for CUDA GPUs (although this scope will probably widen in the future). I believe that it is used in products such as MATLAB’s parallel computing toolbox and Accelereyes’ Jacket (Correction: I’ve since learned that Jacket uses CULA and not MAGMA) among others.

- Intel have come up with a new hardware-based random number generator. Read about it at http://spectrum.ieee.org/computing/hardware/behind-intels-new-randomnumber-generator/. I wonder if hardware-based generators will ever replace pseudo random number generators for simulation work?

- Finally, William Hart released a new version of Coopr back in July, but I didn’t learn about it in time to get it into July’s edition of A Month of Math Software. So I’m telling you about it now (or, more accurately, I’m quoting William): ‘Coopr is a collection of Python software packages that supports a diverse set of optimization capabilities for formulating and analyzing optimization models.’

That’s it for this month. Thanks to everyone who contacted me with news items, this series would be a lot more difficult to compile without you. If you have found something interesting in the world of mathematical software then feel free to let me know and I’ll include it in a future edition.